One of my goals with this blog series is to provide a path for people to see how to go from 'High, Medium and Low' risk ratings to data-driven risk assessments.

One of my goals with this blog series is to provide a path for people to see how to go from 'High, Medium and Low' risk ratings to data-driven risk assessments.

You will learn how to develop Cyber Risk Rating Tables and measure how good they are at assessing your organization's risk. We will cover the following:

- Building a threat library and risk rating tables

- Utilizing OSINT to evaluate forecasts

- Reporting risk forecast accuracy

Standardizing Terminology

As a first step, we need to standardize the way that we talk about things. This has implications later in the process because if we want to start comparing data breaches, the way you categorize them matters a lot. So, having a standard risk language is absolutely critical to that. FAIR is probably the best way you can go with standardizing terminology. Let’s spend some time focusing on standardizing that language and using FAIR for the threat side of it: the Threat Event Frequency (TEF) value and the Threat Capability (TCap) value.

Building a Threat Library

In step two, we start talking about building a threat library. A while ago, I had insight from an Intel article about building a threat agent library. This is a great way to start standardizing the way we talk about bad guys within a firm and probably within an industry. It also goes very far as we start standardizing risk language. When we say 'nation-states', it’s a phrase that exists in the industry, but what does it mean when we talk about it within our firm? That's really what building a threat agent library is all about.

It’s about describing who is either attacking us or who could make mistakes within our organization and assigning them to threat actor profiles. I would suggest building profile cards. By doing this, you build this view of what are the different things that they are interested in, what can they bring to bear in terms of skill sets and resources and what are they trying to do. That really allows you to bring the risk and threat functions within a corporation together, as they spend time thinking about what they need to analyze risk.

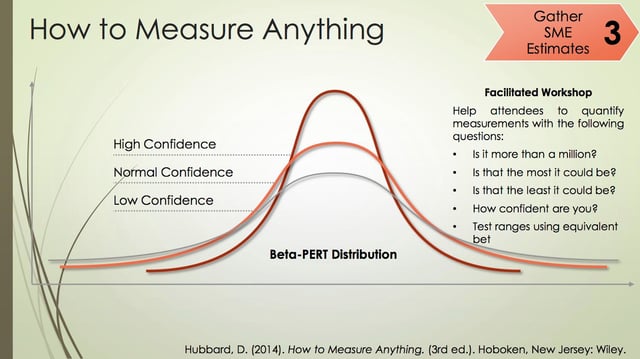

Most organizations don't have such a rating table, that includes a description of threat actor capabilities and the possible frequency of attacks. We talk about this in the FAIR book as well. You can create a rating table by gathering SME estimates using a facilitated workshop where you estimate ranges using min, max and most likely values. This will help in collecting data around otherwise subjective feeling to how often we're being attacked. This is one way that you can go.

An alternative way of going about this is to make assumptions regarding threat actor profiles and then ask SMEs to validate them. That's usually how it's done in a lot of organizations: you take a stab, then ask the SME: “how good are these estimates compared to what you are seeing?” You can go at this in various ways. I personally think that it's helpful to look at ratings in a couple of different ways and ask people that are doing the estimating and data modeling, “does it have to be a normal curve of min, max, most-likely?” There might be other types of distributions that might be a better fit, especially for loss values.

Or you could directly leverage threat libraries and loss tables that commercial software such as RiskLens provide out of the box and customize them as necessary.

In my next post, I will talk about how data aquisition and data coding can aid in cyber-risk forecasting.