CISOs Have an AI Problem. Here’s the Way Out

“As a result of AI, the relationship between the security and risk team and the business has deteriorated very materially…In more than half the conversations I have with non-security executives, the answer is please don’t tell the security people what we are doing [with AI], they’re going to slow us down.”

–Omar Khawaja, CISO at Databricks

At the recent 2024 FAIR Conference (FAIRCON24), Omar and fellow panelists Jacqueline Lebo, Risk Advisory Manager at Safe Security, and Arun Palmumati, Sr. Staff Field Engineer at Databricks, diagnosed why security teams stall for time when the business pushes ahead on AI, and how CISOs can start getting up to speed on the AI revolution.

Watch a replay of the FAIRCON24 session:

Navigating the Complexities of Assessing and Managing AI Risk

What CISOs Don’t Know about AI

AI is arriving in business “at a pace we have not seen before” for a new technology, Arun said, and, Jackie added, it is “exacerbating existing problems like data governance.”


Worse, Omar explained, of the four subsystems that make up an AI system (data, models, governance, and applications), “security teams know almost nothing about” data and models and “know a little about” governance and applications. As a result, “our instincts don’t kick in when it comes to AI… That’s not the CISO’s fault. AI took us all by surprise.”

The FAIR Institute’s FAIR-AIR Model to Demystify AI

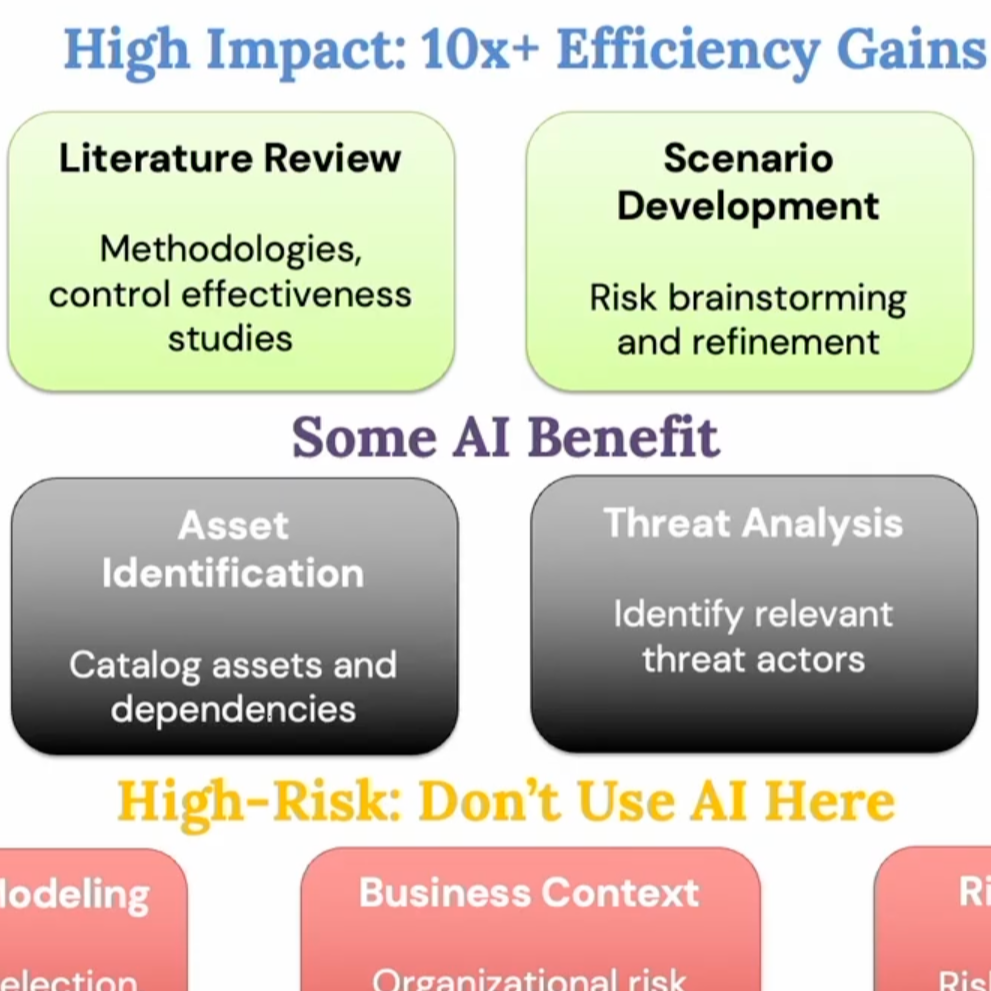

About a year ago, the FAIR Institute began hearing from the community that managing AI risk “was just too hard so they had to say no,” Jackie said. The Institute set up a working group to tackle the issue: “We wanted to give them tools to partner with the business.”

In true FAIR fashion, the group began by breaking the AI challenge into components to “demystify AI architecture,” Arun said, first defining each component and then identifying the risks that affect it.

The result was the FAIR-AIR model, which the team then extended by mapping MITRE ATLAS (Adversarial Threat Landscape for Artificial-Intelligence Systems) and the NIST RMF to it.

Omar Khawaja, Jacqueline Lebo, Arun Palmumati at FAIRCON24

Insights for Security and Risk Teams from the FAIR Institute’s AI Experts

–To get value from AI, you run your data through an AI model; the tradeoff is the risk that your data is compromised or exposed. The ultimate security issue, then, is protecting your data.

–“As a result of AI, every organization’s risk appetite has materially increased but no one has updated their risk appetite documents,” Omar said. “They can’t measure it so they can’t understand it.” Efforts like the development of FAIR-AIR will ultimately “bring tradecraft up” to meet the challenge.

–“Create an express lane,” Omar recommended. Give the business a set of best practices the security team has already approved. Most business leaders would be happy to stick to a “use case within the guardrails.”

–“Just know that you can quantify AI risk,” Jackie said. “You can be a business partner…It’s just going to take a minute to slow down and mobilize the people you need to learn from within your teams, then work to get things securely in place.”
