Managing risk professionally means managing our own cognitive biases so that we can accurately represent the risk facing our organizations. Overcoming the biases each of us brings to an analysis is a challenge, and the only way to manage it effectively is to stay actively aware of the limits of our own perception of reality.

For this reason, I was very interested in the research behind an article from The Conversation that recently made the rounds on social media: "Why Your Brain Never Runs Out of Problems to Find," by psychology postdoc David Levari of Harvard Business School.

In this article, Levari describes a phenomenon his group has been studying: some problems never seem to go away. Once one obstacle has been resolved, another quickly rears its head and takes its place. Not new problems, mind you, but variations of the same existing problem.

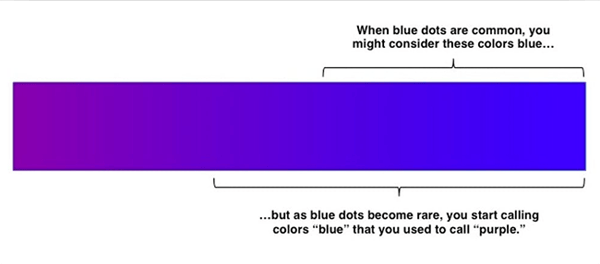

Levari and his colleagues studied this "concept creep" by having volunteers rate how threatening a series of faces looked. The researchers found that as they removed the intentionally intimidating faces from the pool, volunteers began labeling non-intimidating faces as threatening. In other words, faces that had previously been judged safe were now being called high risk. They repeated the experiment using dots in shades of blue and purple: does people's perception of "blue" change depending on how often they see it? It turns out that the less truly blue dots people saw, the more purple dots started to look blue.

Graphic from The Conversation
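To make the blue/purple result concrete, here is a toy model of concept creep. It is my own illustrative assumption, not the model Levari's team used: one judge calls a dot "blue" whenever it is bluer than the average dot seen recently, while another uses a fixed cutoff. Hues run from 0 (pure purple) to 1 (pure blue), and the 0.5 cutoff and the names `relative_threshold` and `is_blue` are hypothetical.

```python
from statistics import mean

# Hypothetical fixed definition of "blue" on a 0 (pure purple) to 1 (pure blue) hue scale
FIXED_THRESHOLD = 0.5

def relative_threshold(recent_hues):
    """Toy assumption: the judge calls anything bluer than the recent average 'blue'."""
    return mean(recent_hues)

def is_blue(hue, threshold):
    """A dot counts as blue only if its hue is strictly above the threshold."""
    return hue > threshold

# Phase 1: hues evenly spread from purple to blue, so blue dots are common
phase1 = [i / 10 for i in range(11)]
# Phase 2: the clearly blue dots (hue above 0.5) are removed from the stream
phase2 = [h for h in phase1 if h <= 0.5]

probe = 0.4  # purple under the fixed definition
print("fixed cutoff:      ", is_blue(probe, FIXED_THRESHOLD))
print("relative, phase 1: ", is_blue(probe, relative_threshold(phase1)))
print("relative, phase 2: ", is_blue(probe, relative_threshold(phase2)))
```

While blue dots are common, both judges agree the 0.4 dot is purple. Once the blue dots disappear, the relative judge's cutoff slides down to about 0.25 and the same dot becomes "blue," while the fixed cutoff stays put.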

Do we experience cyber threats the same way? Will we never run out of cybersecurity problems because, as we solve more of them, more of what used to be benign starts to look scary? What happens to professionals who move from an environment with frequent cyber incidents to one where incidents are far rarer?

Thoughtful risk analysis should help cool the fervor of seeing threat events everywhere. I think this is especially helpful in cybersecurity, where we experience a relatively low frequency of loss events (generally speaking). Sure, there are high-profile breaches, and although they seem frequent, efforts to annualize them (for example, as input to a FAIR analysis) tend to produce a low annual frequency of loss, especially when the event data is sliced by industry.
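The arithmetic behind that annualization is simple, which is why the result can feel surprising. Below is a minimal sketch with made-up inputs (30 publicly reported breaches in one industry over 5 years, across 1,500 organizations); these numbers are hypothetical, not real industry data. Pooling events over organizations and years gives a per-organization annual frequency, and the chance of at least one event in a year is computed under an assumed Poisson model.

```python
import math

def annualized_frequency(event_count, years, population):
    """Loss events per organization per year, pooling events across orgs and years."""
    return event_count / (years * population)

def prob_at_least_one(rate):
    """Assumes events follow a Poisson process: P(N >= 1) = 1 - e^(-rate)."""
    return 1 - math.exp(-rate)

# Hypothetical inputs for illustration only
events = 30     # breaches reported in the industry
years = 5       # observation window
orgs = 1500     # organizations in the industry

lef = annualized_frequency(events, years, orgs)
print(f"{lef:.4f} loss events per organization per year")
print(f"{prob_at_least_one(lef):.2%} chance of one or more in a given year")
```

Thirty breaches sound like a lot, yet here they work out to 0.004 events per organization per year, or roughly one event every 250 years for any single firm.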

Not to be deterred, our subjective brains begin treating non-loss events as examples of loss (Levari's concept creep). We may even justify this by calling them "near misses" and speculating about how bad things could have been but for a lucky break or a good control.

Levari closes his article by asking how we can make more consistent decisions. Consistent decision-making should certainly be a high priority for any risk analysis program. FAIR helps here by giving you a consistent model for cyber risk and a fixed taxonomy with well-established relationships between its variables.
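As a rough illustration of those fixed relationships, here is a point-estimate sketch of two core FAIR derivations: Loss Event Frequency as Threat Event Frequency times Vulnerability, and annualized loss exposure as Loss Event Frequency times Loss Magnitude. The input values and function names are hypothetical, and a real FAIR analysis would use calibrated ranges and Monte Carlo simulation rather than single numbers.

```python
def loss_event_frequency(tef, vulnerability):
    """LEF: threat events per year x probability a threat event becomes a loss event."""
    return tef * vulnerability

def annualized_loss_exposure(lef, loss_magnitude):
    """Point-estimate risk: loss events per year x loss per event."""
    return lef * loss_magnitude

# Hypothetical point estimates; real analyses use ranges, not single values
tef = 12                   # threat events per year
vulnerability = 0.05       # share of threat events that become loss events
loss_magnitude = 200_000   # USD lost per loss event

lef = loss_event_frequency(tef, vulnerability)
ale = annualized_loss_exposure(lef, loss_magnitude)
print(f"LEF = {lef:.2f} per year, annualized loss exposure = ${ale:,.0f}")
```

Because every analyst plugs estimates into the same structure, disagreements show up as differences in specific inputs rather than in how the pieces combine.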

However, "garbage in, garbage out" still applies, and that is where Levari's research helps illuminate our subjective biases. Using clearly defined categories, definitions, and thresholds for threat analysis keeps us from seeing danger in every shadow and makes for higher-quality risk analysis.
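One way to pin those definitions down is to write the classification rule before looking at the data, so a blocked attack can't quietly drift into the loss column. The sketch below is a hypothetical example: the $10,000 materiality threshold, the three categories, and the sample events are assumptions for illustration, not FAIR-mandated values.

```python
from dataclasses import dataclass
from enum import Enum

class Category(Enum):
    LOSS_EVENT = "loss event"
    NEAR_MISS = "near miss"
    BENIGN = "benign"

# Hypothetical, organization-specific threshold agreed on before the analysis
MATERIAL_LOSS_USD = 10_000

@dataclass
class Event:
    name: str
    realized_loss_usd: float
    was_attack_attempt: bool

def classify(event: Event) -> Category:
    """Apply the same written rule to every event, regardless of recent experience."""
    if event.realized_loss_usd >= MATERIAL_LOSS_USD:
        return Category.LOSS_EVENT
    if event.was_attack_attempt:
        return Category.NEAR_MISS  # tracked separately, not counted as loss
    return Category.BENIGN

events = [
    Event("ransomware outage", 250_000, True),
    Event("phishing email blocked at gateway", 0, True),
    Event("suspicious but legitimate vendor email", 0, False),
]
for e in events:
    print(f"{e.name}: {classify(e).value}")

loss_events = sum(classify(e) is Category.LOSS_EVENT for e in events)
print("loss events counted:", loss_events)
```

Near misses still get recorded, since they carry useful information about threat activity and control performance, but a fixed rule keeps them from inflating the loss event count.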

Jack Freund is co-author with Jack Jones of the book Measuring and Managing Information Risk: A FAIR Approach, the pioneering work on cyber risk quantification.

Some 3,000-plus members of the FAIR Institute share knowledge on quantifying cyber risk, and the movement is spreading: The influential consulting group Gartner recently declared cyber risk quantification a must-have for integrated risk management.