Webinar: Intro to the NIST AI RMF (FAIR Followers Have a Head Start)

The FAIR Institute recently hosted a webinar with Martin Stanley, leader of the research and development program for the Cybersecurity and Infrastructure Security Agency (CISA/DHS), on loan to the National Institute of Standards and Technology (NIST) to advance the new NIST Artificial Intelligence Risk Management Framework (AI RMF).

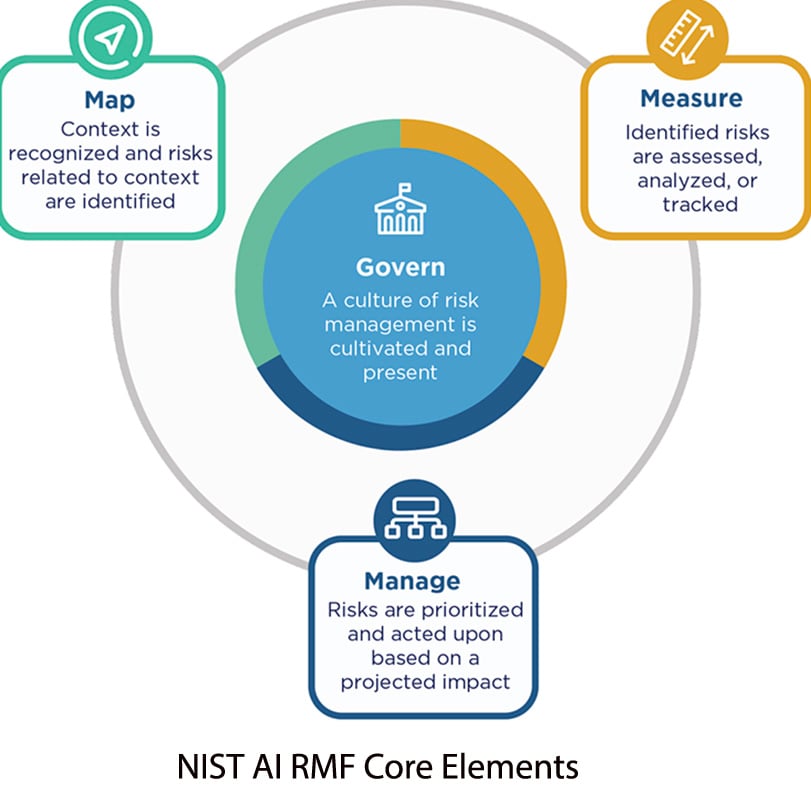

If you’re expecting this latest NIST cybersecurity framework to be like the NIST CSF – implement this technical list of controls to achieve maturity – well, this is not your traditional RMF. On the other hand, if you’re a FAIR practitioner used to thinking in terms of risk scenarios and probabilistic outcomes, you’re already in tune with NIST’s advice on managing artificial intelligence risk.

Watch the webinar:

The NIST Artificial Intelligence Risk Management Framework (AI RMF)

Also visit NIST’s AI RMF website to see the framework and especially the action-oriented AI Playbook.

The AI RMF starts with the premise that artificial intelligence technologies are “socio-technical”, born out of a complex mix of human and machine interaction. “We need to expand the community involved in the risk management process,” Martin said. “It’s not enough to have conversations with the CISO.” If you're waiting to grant an authorization to operate (ATO) before deployment, he said, you are way behind the curve – people in your organization are already deploying AI in ways you probably don’t know about, and those are the people you need to talk to.

“Context matters greatly,” he said. “Trying to bucketize risks and measure them in a specific way in every context of use is probably more likely to cause you to miss impact than anything else.” So, hello risk scenarios.

Also new to the traditional mentality: “In cybersecurity, we’re used to fixed measurements and standard outputs. That’s out the window when it comes to AI systems that are probabilistic in nature…with different outputs on similar queries.” Hello, Monte Carlo distributions.
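
For readers new to the idea, here is a minimal sketch of what a Monte Carlo view of a single risk scenario can look like. It is an illustration only, not something shown in the webinar or prescribed by the AI RMF: the function name `simulate_annual_loss`, the 10% monthly event chance and the loss range of $10,000 to $500,000 are all made-up assumptions.

```python
import random


def simulate_annual_loss(trials=10_000, seed=42):
    """Simulate total annual loss for one hypothetical risk scenario."""
    rng = random.Random(seed)  # seeded so the run is repeatable
    losses = []
    for _ in range(trials):
        # Assumption: each of 12 months has a 10% chance of one loss event.
        events = sum(1 for _ in range(12) if rng.random() < 0.10)
        # Assumption: each event costs between $10k and $500k, most likely $50k.
        total = sum(rng.triangular(10_000, 500_000, 50_000) for _ in range(events))
        losses.append(total)
    return losses


losses = sorted(simulate_annual_loss())
p50 = losses[len(losses) // 2]          # median simulated year
p90 = losses[int(0.9 * len(losses))]    # a bad year: 90th percentile
print(f"Median annual loss: ${p50:,.0f}")
print(f"90th percentile annual loss: ${p90:,.0f}")
```

The output is a range of outcomes rather than one fixed score, which is the shift in mindset the quote describes.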

More than ever before, infosec practitioners will need to balance risk and reward. “We are going to have risks that we need to manage that we are not going to be able to measure or identify. That’s where an opportunity conversation comes into play” with AI system users.

Ultimately, what good looks like in AI risk management is “building that risk-aware culture, getting a governance function correct, having a good inventory of use cases for the organization and having the right people at the table.”

Listen to Martin Stanley speak on AI risk management, and be sure to catch the Q&A at the end, where the audience asks the questions that FAIR practitioners want answered.