Learn How FAIR Can Help You Make Better Business Decisions

by Jack Jones

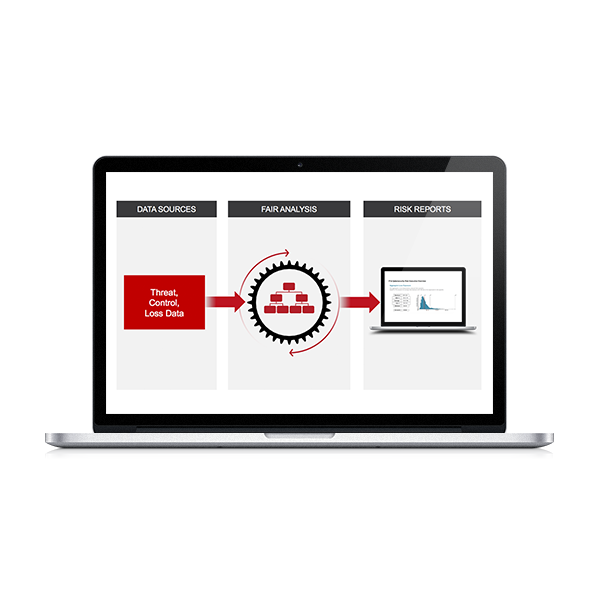

With the advent of FAIR, organizations finally have a risk model that enables effective cyber risk measurement (quantification). The other necessary part of the equation, of course, is data. The good news on that score is three-fold:

Of the three, the consensus is that reliance on subject matter expert estimates should be limited whenever possible, because of the potential for cognitive bias and other human-related failings. Furthermore, the analysis process can be streamlined when good data exists and is appropriately normalized to the risk model.

Besides, it simply makes sense to use security technology and process data wherever they exist. This white paper therefore provides guidance and examples to help organizations improve their FAIR-based risk analyses using these data sources.

This white paper was developed as part of the work performed by the data integration workgroup of the FAIR Institute and is available to all FAIR Institute members. You can become a member of the FAIR Institute (membership is free) and sign up for the data integration workgroup here.