In my career as an IT auditor, there were a few questions I struggled to answer when communicating with clients and peers.

- Why is one control labeled High Risk and another control labeled Low Risk?
- How does a control failure/exception impact the audit opinion?
- Outside the scope of the audit, why should we remediate this control exception?

After going through FAIR Fundamentals Training, the FAIR Analyst Learning Path, and seeing FAIR's practical application across a number of Fortune 1000 companies, I've reflected on a few ways that FAIR can help address those tricky IT audit questions with quantitative (and defensible) concepts.

Why is one control labeled high-risk and another control labeled low-risk?

Scoping any audit begins with an initial risk assessment to evaluate the risks associated with processes and the controls in place to address those risks. Once risks and controls are identified, the auditor assigns a risk rating to assess the risk of failure of each control.

When going through this process in the past, I asked myself questions like: "If this control fails, how will it impact the process?" Or, when performing a SOC 1 or SOC 2 examination: "If this control fails, how will it affect the ability to achieve the control objective or criteria?" After asking these questions, I would assign a qualitative risk rating like "High" or "Low".

But when it was time to defend my risk assessment, these qualitative ratings made things difficult. I assigned qualitative ratings based on my own mental model. Sure, I used experience and sound assumptions to inform that mental model, but I found that my assumptions were, at times, very different from those of my peers, partners, or clients.

If a reviewing partner asked why I rated a risk of failure as Low when they thought it was High, how could I defend my work qualitatively? If a client asked why I asked them to produce more testing samples for a high-risk control, how could I defend my decision qualitatively? The unspoken mental models behind these qualitative ratings make them difficult to defend no matter how much support exists.

How does a control failure/exception impact the audit opinion?

Another issue I faced as an auditor was trying to determine the impact of control exceptions and, ultimately, deciding how they might impact the audit opinion. This builds off the qualitative High Risk vs. Low Risk issue above.

After identifying an audit finding, I would naturally revisit my risk assessment from the initial scoping exercise. But how high is that High risk? If a High-risk control fails, how does that affect the audit opinion? On the other hand, if multiple Low-risk controls fail, how do they affect the audit opinion? How many Lows equal one High? Does Low plus Low equal High?

OK, you get the idea.

The initial risk assessment of High or Low doesn't help much when it comes to evaluating a control exception. There are so many unspoken assumptions that go into the initial risk assessment and there are other factors to consider when evaluating a control failure.

Since the initial risk assessment doesn't help, I went back to my mental model to determine how the control exception affects the audit. But this process raises the same questions as above. What if my peers, partners, or clients disagree with my assessment? Now, the stakes are higher. This isn't just an initial risk assessment we're debating. This could be the difference between an unqualified opinion and a qualified opinion in the audit report. Anyone who has performed an audit understands the gravity of these discussions and the sensitivity around them.

Outside the scope of the audit, why should we remediate this control exception?

Now, let's say we made it through the discussion above. The control exceptions will be reported in the final audit report, but the overall opinion will be unqualified. Other than the fact that the exceptions need to be addressed as part of the audit, how do we evaluate the need to remediate? Clients likely have constraints in terms of time and resources available to dedicate to remediating audit findings.

In addition, this isn't the only audit they have to endure. Especially in highly regulated industries like banking, healthcare, and insurance, risk and compliance departments are faced with audit overload. You identified five control exceptions in the audit and you recommend remediating all five. How does your client know where to start? What is the risk if they don't remediate all the findings? What should they prioritize?

Qualitatively, these questions are difficult to answer. Again, I would revert to my individual mental model in the absence of an accepted framework to inform decisions.

The good news is that FAIR can fill that void.

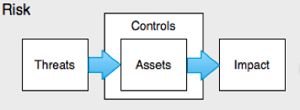

If the examples above resonate with you, you're probably wondering how FAIR can help. The first step is to scope scenarios in FAIR terms by identifying the asset, threat, and effect. For example, when considering a patch management control, think about the reasons this control is being tested in the first place. Ultimately, you're probably concerned with the confidentiality or integrity of data, or the availability of a key system.

To demonstrate, let's say you're worried about the following:

Asset: PII in a Crown Jewel Database

Threat: Cyber Criminal exploiting vulnerabilities caused by poor patch management

Effect: Confidentiality

Scoping this scenario in FAIR terms will allow you to quantify the scenario and understand risk exposure in financial terms. This blog post from RiskLens will take you through the rest of the process: "Reacting to IT Audit Findings? Get Ahead of Them with Cyber Risk Quantification!"

By quantifying risk in financial terms you can start to address these previously qualitative issues with quantitative (and defensible) results.
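To make that concrete, here is a minimal sketch of what quantifying the patch management scenario could look like. It is an illustration under stated assumptions, not the method of RiskLens or any other FAIR tool: every number is made up, the function names are hypothetical, and a triangular distribution stands in for the calibrated ranges an analyst would actually estimate. The only FAIR idea it relies on is that risk is driven by how often a loss event occurs and how much each one costs.

```python
import random
import statistics


def simulate_annual_loss(lef, magnitude, trials=10_000, seed=42):
    """Simulate annualized loss for one scenario (hypothetical helper).

    lef and magnitude are (minimum, most_likely, maximum) estimates for
    loss event frequency (events per year) and loss per event (dollars).
    """
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    losses = []
    for _ in range(trials):
        # random.triangular takes (low, high, mode)
        events_per_year = rng.triangular(lef[0], lef[2], lef[1])
        loss_per_event = rng.triangular(magnitude[0], magnitude[2], magnitude[1])
        losses.append(events_per_year * loss_per_event)
    return losses


def summarize(losses):
    """Return the mean plus rough 10th and 90th percentiles."""
    ordered = sorted(losses)
    return {
        "mean": statistics.mean(ordered),
        "p10": ordered[int(0.10 * len(ordered))],
        "p90": ordered[int(0.90 * len(ordered))],
    }


# Assumed estimates for the PII scenario (illustrative only).
magnitude = (250_000, 1_000_000, 5_000_000)

# Current state: the patch management control has exceptions.
current = summarize(simulate_annual_loss((0.1, 0.3, 1.0), magnitude))

# Future state: exceptions remediated, so loss events are assumed rarer.
remediated = summarize(simulate_annual_loss((0.02, 0.1, 0.4), magnitude))

reduction = current["mean"] - remediated["mean"]

print(f"Current exposure:    ${current['mean']:,.0f} per year on average")
print(f"Remediated exposure: ${remediated['mean']:,.0f} per year on average")
print(f"Estimated reduction: ${reduction:,.0f} per year")
```

Run the same comparison for each control exception and you get a dollar figure for every finding. Unlike "Low plus Low", those figures can be added together, ranked, and weighed against the cost of remediation.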

We know that as long as humans are involved in audits, there will always be a level of subjectivity. The same is true when practicing FAIR. We consider subjectivity and objectivity on a spectrum. As we quantify risks and drive more data into risk analyses, we drive more objectivity into those analyses, which, in turn, helps to inform and defend decisions.

For auditors and compliance professionals, FAIR provides a way to drive more objectivity and facilitate clearer conversations around common audit issues. Ultimately, audit stakeholders (like CFOs and the Board) speak in financial terms, so why shouldn't we?