The last post examined how threat intelligence fits within the risk management process. This one focuses on how intelligence drives risk assessment and analysis – a critical phase within the overall risk management process.

If there’s one thing I’ve learned about assessing risk over the years, it’s this: creativity will always fill the void of uncertainty. A second, related lesson is that data *is* the plural form of anecdote to most people most of the time.

In other words – people are great at making $#@!% up. And let’s be honest – hopping a Trolley ride through Mister Rogers’ Neighborhood of Make-Believe Risks is a lot more fun than dealing with the realities of uncertainty and ambiguity.

The unfortunate outcome of these tendencies is that many risk assessments become a session of arbitrarily assigning frequency and impact colors to all sorts of bad things conceived by an interdepartmental focus group, rather than a rational, information-driven exercise. Aside from the entertainment value of watching people argue about whether yellow*yellow equals orange or red, this isn’t a great recipe for success. And thus, we all too often underestimate the important risks and overestimate the unimportant ones. See Doug Hubbard’s The Failure of Risk Management for more on this topic.

Risky questions deserving intelligent answers

Clearly a more “intelligent” approach is needed for analyzing information risk. When tackling various issues or problems, I almost always try to start with a set of interesting questions. This probably harkens back to my scientific background, where simple questions pave the way for more formal hypotheses, experimental design, data collection, etc. Thought experiments like the one we’re conducting here are less rigorous than those done in a lab, but formulating questions is still a useful exercise. In that spirit, here’s a (not exhaustive) list of questions risk assessors/analysts have that I think threat intelligence can help answer.

- What types of threats exist?
- Which threats have occurred?
- How often do they occur?
- How is this changing over time?
- What threats affect my peers?
- Which threats could affect us?
- Are we already a victim?
- Who’s behind these attacks?
- Would/could they attack us?
- Why would they attack us?
- Are we a target of choice?
- How would they attack us?
- Could we detect those attacks?
- Are we vulnerable to those attacks?
- Do our controls mitigate that vulnerability?
- Are we sure controls are properly configured?
- What happens if controls do fail?
- Would we know if controls failed?
- How would those failures impact the business?
- Are we prepared to mitigate those impacts?
- What’s the best course of action?
- Were these actions effective?
- Will these actions remain effective?

But how, exactly, can threat intelligence help answer these questions? What frameworks or processes are available? Where does the relevant intelligence come from and in what form does it exist? How do threat intel and risk management teams collaborate to produce meaningful results that drive better decisions? These are the kinds of questions we’ll explore during the rest of this post (and series).

Feeling RANDy, Baby?

There is surprisingly little information I’ve found in the public domain on the topic of using threat intelligence to drive the risk analysis process. There is, however, a paper from the RAND Corporation that goes the opposite way – Using Risk Analysis to Inform Intelligence Analysis. It concludes that “risk analysis can be used to sharpen intelligence products…[and]…prioritize resources for intelligence collection.” I found this diagram especially useful for explaining the interplay between the two processes. It’s well worth reading regardless of which direction you’re traveling on the risk-intelligence continuum.

Other recommended quick reads that touch on threat intel and risk analysis include this article from Dark Reading and this one from TechTarget. But neither of those ventures into the realm of frameworks or methodologies. If you know of others, feel free to engage @wadebaker or @threatconnect on Twitter. I’ll update this post for the benefit of future readers.

Let’s be FAIR about this

We’ve already reviewed NIST SP 800-39 and ISO/IEC 27005 in this series as prototypical examples of the risk management process. While both of these frameworks (and most others) “cover” risk analysis, Factor Analysis of Information Risk (FAIR) reverse-engineers it and builds it into a practical yet effective methodology. Note – Neither I nor ThreatConnect has any stake whatsoever in FAIR. I’ve chosen to reference FAIR because a) it’s open, b) it’s a sound analytical approach, c) it plays well with threat intelligence, and d) it plays well with ISO 27005. Other frameworks could be used, but I don’t think the process would be as intuitive or comprehensive. But your mileage may vary. More info on FAIR is available here, here, and here.

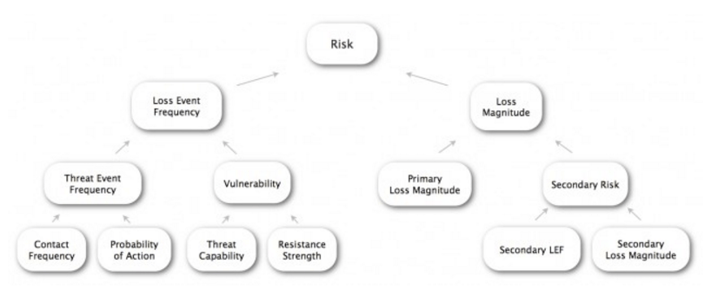

The diagram above represents how FAIR breaks down the broad concept of risk into more discrete factors that can be assessed individually and then modeled in aggregate. The lowest tier will be our focus for infusing intelligence into the risk analysis process. Before we go there, though, it will be helpful to discuss a similar decomposition model for threat intelligence.
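To make the idea of "assessed individually and then modeled in aggregate" a bit more concrete, here is a minimal Python sketch of how the lowest-tier factors roll up in the standard FAIR taxonomy. It is a simplification under stated assumptions: it uses single point estimates rather than the ranges and distributions a real FAIR analysis would use, it collapses threat capability and resistance strength into one `vulnerability` probability, and every name and number in it is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class FairInputs:
    """Point estimates for the leaf factors (all values are hypothetical)."""
    contact_frequency: float      # contacts with the threat per year
    probability_of_action: float  # chance a contact becomes an attempt (0-1)
    vulnerability: float          # chance an attempt succeeds (0-1); stands in
                                  # for threat capability vs resistance strength
    primary_loss: float           # loss per loss event, in currency units
    secondary_lef: float          # share of loss events with secondary loss (0-1)
    secondary_loss: float         # loss per secondary event, in currency units


def annualized_risk(f: FairInputs) -> float:
    """Roll the leaf factors up the tree: TEF -> LEF -> annualized loss."""
    threat_event_frequency = f.contact_frequency * f.probability_of_action
    loss_event_frequency = threat_event_frequency * f.vulnerability
    loss_magnitude = f.primary_loss + f.secondary_lef * f.secondary_loss
    return loss_event_frequency * loss_magnitude


# Illustrative inputs only; not drawn from any real assessment.
example = FairInputs(
    contact_frequency=20,
    probability_of_action=0.25,
    vulnerability=0.1,
    primary_loss=100_000,
    secondary_lef=0.5,
    secondary_loss=200_000,
)
print(annualized_risk(example))
```

The point of the sketch is only that each leaf factor is a separate input, which is exactly where intelligence can be plugged in.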

Pickin’ up STIX

I’ve long maintained that one of the primary challenges to managing information risk is the dearth of accessible and reliable data to inform better decisions. Correcting this was the primary driver behind Verizon’s Data Breach Investigations Report (DBIR) series. As we studied and reported on more security incidents, we realized that the lack of a common language was one of the key impediments to creating a public repository of risk-relevant data. This led to the creation of the Vocabulary for Event Recording and Incident Sharing (VERIS) and the launch of the VERIS Community Database (VCDB). If you’re looking to bridge the worlds of incident response and risk management/analysis, I suggest reviewing those resources.

But this post is about bridging the chasm between threat intelligence and risk analysis. While IR and intel share many commonalities, they also differ in many ways. Similarly, VERIS contains elements that are relevant to the intelligence process, but was never optimized for that discipline. That goal was taken up by the Structured Threat Information eXpression (STIX™), a community effort led by DHS and MITRE to define and develop a language to represent structured threat information. The use of STIX has grown a lot over the last several years, and it has now transitioned to OASIS for future oversight and development. It’s also worth noting that a good portion of the STIX incident schema was derived from VERIS, which is now a recognized (often default) vocabulary within STIX. There’s also a script for translating between the two schemas, but I can’t seem to locate it (help me out, STIX peeps!).

The point in bringing this up is that if you’re looking for threat intelligence to drive risk analysis, learning to speak STIX is probably a good idea.

What about the Diamond?

Thanks for asking. Yes, the Diamond Model for Intrusion Analysis, which we talk about a lot here at ThreatConnect, is definitely a threat intelligence model. But it is a process for doing threat intelligence rather than a language or schema. Because of this, the Diamond Model and STIX are complementary rather than competitive. The STIX data model maps quite well into the Diamond, a subject we’ll explore another time. For now, suffice it to say that using FAIR, STIX, VERIS, VCDB, DBIR, and the Diamond might sound like crazy talk, but it’s perfectly sane. Ingenious even.

A FAIR-ly intelligent approach

With all of that background out of the way, we’re at the point where the rubber finally hits the road. The first thing I’d like to do is identify risk factors in FAIR that can be informed by threat intelligence. To do that, I’ll use a modified version of the FAIR diagram shown earlier. Orange stars mark risk factors where intelligence plays a key role in the analysis process; grey represents a minor or indirect relationship.

Next, I’ll attempt to create a mapping between these FAIR factors and STIX data model constructs, which lays the groundwork for intelligence-driven risk analysis. Before I do that, though, I’d like to mention a few things. First off, I apologize for the rigid and rather dry structure; I couldn’t think of a better way of presenting the necessary information. You’ll notice a lot of redundancy. This is because the relationships between the models are not mutually exclusive; a STIX field can inform multiple FAIR risk factors in different ways. Furthermore, the STIX schema inherently contains many redundant field names across its nine constructs. One final note is that I have not listed every conceivable relevant STIX field for each risk factor, but rather tried to focus on the more direct/important ones. That’s not to say I didn’t miss some that should have been included.

Enough of that – let’s get to it.

Contact Frequency

Relevant STIX fields:

- Threat Actor
  - Identity: Identifies the subject of the analysis. This would, for instance, differentiate an external threat actor from a full-time employee or remote contractor.
  - Type: While not a specific identity, generic types (e.g., outsider vs insider) still help in determining the likelihood of contact.
- Incident
  - Victim: Profiling prior victims may help determine the threat actor’s likelihood of coming into contact with your organization.
- Indicator
  - Sightings: Evidence of prior contact with a threat informs assessments of current/future contact.
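As one illustration of how Sightings might feed this factor, the sketch below turns a list of sighting dates into a rough contacts-per-year figure. It assumes the dates have already been pulled out of Indicator Sightings by some other means; the function name, the observation window, and the sample dates are all hypothetical, and a simple average like this ignores trends and detection gaps.

```python
from datetime import date


def contacts_per_year(sightings: list[date], start: date, end: date) -> float:
    """Average yearly rate of sightings that fall inside [start, end]."""
    if end <= start:
        raise ValueError("end must be after start")
    in_window = [d for d in sightings if start <= d <= end]
    years = (end - start).days / 365.25
    return len(in_window) / years


# Hypothetical sighting dates for one threat actor's indicators.
sample = [date(2014, 3, 1), date(2014, 9, 15), date(2015, 6, 30), date(2017, 1, 1)]
print(contacts_per_year(sample, date(2014, 1, 1), date(2016, 1, 1)))
```

A figure like this is a starting estimate for the analyst to adjust, not a measurement of true contact frequency.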

Probability of Action

Relevant STIX fields:

- Threat Actor
  - Motivation: Understanding a threat actor’s motivation helps assess how likely they are to act against your organization.
  - Intended_Effect: A threat actor’s typical intent/goals further informs assessments of the likelihood, persistence, and intensity of actions against your organization.
- Incident
  - Attributed_Threat_Actors: Useful when searching for intelligence on particular threat actors or groups.
  - Victim: Profiling prior victims helps assess a threat actor’s likelihood of targeting your organization.
  - Intended_Effect: A threat actor’s intent/goals in prior incidents further informs assessments of the likelihood, persistence, and intensity of actions against your organization.
- Campaign
  - Intended_Effect: A threat actor’s intent/goals in prior campaigns further informs assessments of the likelihood, persistence, and intensity of actions against your organization.
  - Attribution: Useful when searching for intelligence on particular threat actors or groups.
- Exploit Target
  - Vulnerability: Exploitable vulnerabilities may attract malicious actions against your organization from opportunistic threat actors.
  - Weakness: Exploitable security weaknesses may attract malicious actions against your organization from opportunistic threat actors.
  - Configuration: Exploitable asset configurations may attract malicious actions against your organization from opportunistic threat actors.
- Indicator
  - Sightings: Evidence of prior malicious actions informs assessments of the probability of current/future actions.

Threat Capability

Relevant STIX fields:

- Threat Actor
  - Type: The type of threat actor (e.g., a nation-state vs an individual) grants insight into a threat actor’s possible skills and resources.
  - Sophistication: Informs assessments of a threat actor’s skill-based capabilities.
  - Planning_And_Operational_Support: Informs assessments of a threat actor’s resource-based capabilities.
  - Observed_TTPs: The tactics, techniques, and procedures utilized by a threat actor reveal a great deal about their capabilities.
  - Intended_Effect: Certain intentions/goals may enable a threat actor to apply more force against a target. For instance, if concealment isn’t necessary, more overt and forceful actions can be taken.
- Incident
  - Generally applicable; studying prior incidents associated with a threat actor informs multiple aspects of capability assessments.
- Campaign
  - Generally applicable; studying campaigns associated with a threat actor informs multiple aspects of capability assessments.
- TTP
  - Behavior: The attack patterns, malware, or exploits leveraged by a threat actor directly demonstrate their capabilities.
  - Resources: Informs assessments of a threat actor’s resource-based capabilities.
  - Exploit_Targets: Identifies vulnerabilities, weaknesses, and configurations a threat actor is capable of exploiting.
  - Kill_Chain_Phases: A threat actor’s TTPs for each phase of the Kill Chain offer another lens through which to understand their capabilities. For instance, do they develop their own custom malware for the exploitation phase or reuse commodity kits?
- Exploit Target
  - Vulnerability: Identifies specific vulnerabilities a threat actor is capable of exploiting.
  - Weakness: Identifies specific security weaknesses a threat actor is capable of exploiting.
  - Configuration: Identifies specific asset configurations a threat actor is capable of exploiting.

Resistance Strength

Relevant STIX fields:

- Threat Actor
  - Intended_Effect: Certain intentions/goals may render controls ineffective. For instance, if destruction or disruption is the desired effect, disclosure-based controls will offer little resistance.
- TTP
  - Kill_Chain_Phases: The phase in the kill chain can inform assessments of resistance strength against various TTPs. For instance, AV software offers little value after the exploitation phase.
- Incident
  - Affected_Assets: The compromise of certain assets may affect the strength of COAs. For instance, knowing the Active Directory server was compromised lessens the effectiveness of authentication mechanisms. Can also highlight recurring security failures involving particular assets or groups of assets.
  - COA_Taken: Knowing what has already been done informs assessments of the incremental value of additional COAs.
  - Intended_Effect: Certain intentions/goals may render controls ineffective. For instance, if destruction or disruption is the desired effect, disclosure-based controls will offer little resistance.
- Exploit Target
  - Vulnerability: Unpatched vulnerabilities can erase or erode the strength of security controls against threats capable of exploiting them.
  - Weakness: Unmitigated security weaknesses can erase or erode the strength of security controls against threats capable of exploiting them.
  - Configuration: Poorly configured assets can erase or erode the strength of security controls against threats capable of exploiting them.
  - Potential_COAs: May identify previously successful COAs against a threat, thus informing assessments of resistance strength.
- Course of Action
  - Type: Different types of COAs can have significantly different effects and strengths.
  - Stage: The stage at which COAs occur informs assessment of effort and efficacy. For instance, it’s much harder to resist or remove a threat actor who is deeply entrenched throughout the victim’s environment.
  - Objective: Objectives for COAs have a significant effect on resistance strength. For instance, some controls are better able to detect malicious actions than prevent them.
  - Impact: Understanding the impact of a COA informs future assessments of resistance strength for that COA as well as other complementary or compensating COAs.
  - Efficacy: Understanding how well a COA met its objective(s) informs future assessments of resistance strength for that COA as well as other complementary or compensating COAs.
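In FAIR, threat capability and resistance strength are compared against each other to estimate vulnerability, i.e. how often the threat’s capability exceeds the defender’s resistance. The sketch below shows one rough way to do that comparison with a small Monte Carlo simulation. The 0–100 scale, the triangular distributions, the `Estimate` class, and all of the numbers are assumptions made for illustration; in practice the ranges would come from intelligence such as the fields listed above.

```python
import random
from dataclasses import dataclass


@dataclass
class Estimate:
    """A low / most-likely / high estimate on an assumed 0-100 scale."""
    low: float
    mode: float
    high: float


def estimate_vulnerability(tcap: Estimate, rs: Estimate,
                           trials: int = 10_000, seed: int = 0) -> float:
    """Fraction of simulated attempts where capability exceeds resistance."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    successes = 0
    for _ in range(trials):
        # random.triangular takes its arguments as (low, high, mode).
        capability = rng.triangular(tcap.low, tcap.high, tcap.mode)
        resistance = rng.triangular(rs.low, rs.high, rs.mode)
        if capability > resistance:
            successes += 1
    return successes / trials


# Hypothetical ranges: a fairly capable actor against moderate controls.
print(estimate_vulnerability(Estimate(50, 70, 90), Estimate(40, 60, 80)))
```

Widening or shifting either range, for example after learning an actor reuses commodity kits, changes the resulting vulnerability estimate accordingly.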

Primary Loss Magnitude

Relevant STIX fields:

- Incident
  - Security_Compromise: Distinguishing unsuccessful attempts vs network intrusions vs data disclosures informs impact assessments.
  - Affected_Assets: The assets affected in an incident have a direct bearing on impact.
  - Impact_Assessment: May contain information or values directly useful for assessing loss magnitude.

Secondary Loss Event Frequency

Relevant STIX fields:

- Threat Actor
  - Motivation: Understanding a threat actor’s motives may hint at possible secondary losses. For instance, disgruntled employees may desire to release embarrassing data over time.
  - Intended_Effect: Understanding a threat actor’s goals may hint at possible secondary losses. For instance, some threat actors seek to embarrass victims by releasing stolen data publicly, while others may provide that information to other threat actors for a fee.
- Incident
  - Intended_Effect: Understanding a threat actor’s goals may hint at possible secondary losses. For instance, some threat actors seek to embarrass victims by releasing stolen data publicly, while others may provide that information to other threat actors for a fee.
  - Impact_Assessment: May contain information or values directly useful for assessing secondary loss event frequency.
- Course of Action
  - Generally applicable; knowing prior COAs informs assessments of future/secondary loss events.
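Because the same STIX field shows up under several FAIR factors, it can help to hold the mapping above as data and query it in both directions. The following Python sketch encodes the lists from this post as a nested dictionary; the variable and function names are hypothetical, constructs marked "generally applicable" are stored with an empty field list, and nothing here depends on any particular STIX library.

```python
# FAIR factor -> STIX construct -> fields, transcribed from the lists above.
# An empty list means the construct was marked "generally applicable".
_ET = ["Vulnerability", "Weakness", "Configuration"]
FAIR_TO_STIX = {
    "Contact Frequency": {
        "Threat Actor": ["Identity", "Type"],
        "Incident": ["Victim"],
        "Indicator": ["Sightings"],
    },
    "Probability of Action": {
        "Threat Actor": ["Motivation", "Intended_Effect"],
        "Incident": ["Attributed_Threat_Actors", "Victim", "Intended_Effect"],
        "Campaign": ["Intended_Effect", "Attribution"],
        "Exploit Target": _ET,
        "Indicator": ["Sightings"],
    },
    "Threat Capability": {
        "Threat Actor": ["Type", "Sophistication",
                         "Planning_And_Operational_Support",
                         "Observed_TTPs", "Intended_Effect"],
        "Incident": [],
        "Campaign": [],
        "TTP": ["Behavior", "Resources", "Exploit_Targets", "Kill_Chain_Phases"],
        "Exploit Target": _ET,
    },
    "Resistance Strength": {
        "Threat Actor": ["Intended_Effect"],
        "TTP": ["Kill_Chain_Phases"],
        "Incident": ["Affected_Assets", "COA_Taken", "Intended_Effect"],
        "Exploit Target": _ET + ["Potential_COAs"],
        "Course of Action": ["Type", "Stage", "Objective", "Impact", "Efficacy"],
    },
    "Primary Loss Magnitude": {
        "Incident": ["Security_Compromise", "Affected_Assets", "Impact_Assessment"],
    },
    "Secondary Loss Event Frequency": {
        "Threat Actor": ["Motivation", "Intended_Effect"],
        "Incident": ["Intended_Effect", "Impact_Assessment"],
        "Course of Action": [],
    },
}


def factors_informed_by(construct: str, field: str) -> list[str]:
    """Return the FAIR factors that list the given STIX construct field."""
    return [factor for factor, constructs in FAIR_TO_STIX.items()
            if field in constructs.get(construct, [])]


print(factors_informed_by("Threat Actor", "Intended_Effect"))
```

Querying from the STIX side like this is one way to see, for any new piece of intelligence, which parts of a risk analysis it might be worth revisiting.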

Wrapping it up

I’d like to reiterate that I don’t view this as a done deal – much the opposite, in fact. One of the things I hope this post prompts is further discussion and refinement on this topic (generally) and this mapping (specifically) by the FAIR and STIX user communities.

This post was originally published on threatconnect.com.