Watch Out for these 5 ‘Cyber Risk Quantification’ Methods. They Don’t Support Cost-Effective Risk Management

As cyber risk quantification (CRQ) grows in popularity, so does confusion in the marketplace over how to choose the right solution among the many that carry the CRQ label.

To cut through the fog: Yes, assigning a number to a cyber risk is a form of ‘quantification’, but not really different in kind from categorizing a risk as red, yellow or green. It’s not enough to inform business decision-makers on how to run cost-effective risk management. That requires quantification that puts a financial value on cyber risk so it can be prioritized against other risks or run through a cost-benefit analysis.
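To make the cost-benefit idea concrete, here is a minimal sketch in Python. Every figure in it is hypothetical, and it uses single point estimates to keep the arithmetic visible; a real quantitative analysis would typically work with ranges and distributions instead. The function name `annualized_loss` is an illustrative assumption, not part of any standard.

```python
# All figures below are hypothetical, chosen only to illustrate the arithmetic.

def annualized_loss(events_per_year: float, loss_per_event: float) -> float:
    """Expected annual loss: event frequency times average loss magnitude."""
    return events_per_year * loss_per_event

# Assumed current state: one loss event every 2 years, averaging $1,000,000.
baseline = annualized_loss(events_per_year=0.5, loss_per_event=1_000_000)

# Assumed effect of a proposed control: frequency drops to one event every 4 years.
with_control = annualized_loss(events_per_year=0.25, loss_per_event=1_000_000)

control_cost_per_year = 100_000  # hypothetical annual cost of the control
risk_reduction = baseline - with_control
net_benefit = risk_reduction - control_cost_per_year

print(f"Baseline exposure:     ${baseline:,.0f} per year")
print(f"Exposure with control: ${with_control:,.0f} per year")
print(f"Risk reduction:        ${risk_reduction:,.0f} per year")
print(f"Net benefit:           ${net_benefit:,.0f} per year")
```

Because both the risk and the control are expressed in dollars, they can be compared directly. A red, yellow or green rating offers no equivalent comparison.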

In his eBook Understanding Cyber Risk Quantification: A Buyer’s Guide (FAIR Institute Contributing Membership required to view), Jack Jones, creator of the FAIR standard for cyber and technology risk quantification, evaluates the shortcomings of five of these common numbering systems. He points out that they do have some value, just not for quantifying and expressing the “probability and magnitude of cyber-related loss in financial terms,” the basic deliverable of true quantitative risk analysis. Here are the five, along with Jack’s caveats:

1. Numerically expressed ordinal risk measurement

Simple 5x5 scales for probability and impact. The scores are then multiplied to derive a “risk score” (5x2 = 10).

You might use it for: A quick and relatively low effort way to group loss event scenarios.

But the problem is: A “1”-rated loss scenario may be less likely to occur than a “2”-rated one, but by how much? You can’t do math with these ordinal numbers; they’re really expressions of subjective judgments.
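A short Python sketch shows why multiplying ordinal ratings can mislead. The mapping from each rating to a probability or dollar figure is entirely made up for illustration, since no standard defines what a “2” or a “5” means in real units, and the helper names are assumptions.

```python
# Hypothetical meanings behind each ordinal rating. These midpoints are
# invented for illustration; an organization's own scale may differ widely.
PROBABILITY_MIDPOINT = {1: 0.01, 2: 0.05, 3: 0.20, 4: 0.50, 5: 0.90}  # chance per year
IMPACT_MIDPOINT = {1: 10_000, 2: 100_000, 3: 1_000_000,
                   4: 10_000_000, 5: 50_000_000}                      # dollars

def risk_score(probability_rating: int, impact_rating: int) -> int:
    """The ordinal 'risk score': the two ratings multiplied together."""
    return probability_rating * impact_rating

def expected_loss(probability_rating: int, impact_rating: int) -> float:
    """Expected annual loss under the hypothetical midpoints above."""
    return PROBABILITY_MIDPOINT[probability_rating] * IMPACT_MIDPOINT[impact_rating]

scenario_a = (5, 2)  # very likely, low impact
scenario_b = (2, 5)  # unlikely, severe impact

for name, scenario in (("A", scenario_a), ("B", scenario_b)):
    print(f"Scenario {name}: risk score {risk_score(*scenario)}, "
          f"expected loss ${expected_loss(*scenario):,.0f} per year")
```

Both scenarios receive the same risk score of 10, yet under these assumed midpoints their expected losses differ by more than an order of magnitude.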

2. Controls-focused assessments

Also known as risk-management maturity assessments for NIST CSF, ISO 2700x, COBIT and other frameworks that list best-practice controls and processes.

You might use it for: The important task of evaluating whether certain controls are in place and functioning, by assigning a numeric grade.

But the problem is: They don’t measure risk and so can’t give guidance on whether to prioritize one control deficiency over another. (Note: NIST CSF includes FAIR among best practices for risk analysis and risk management.)

3. Vulnerability assessments

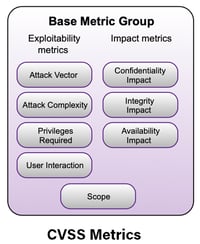

Technologies that most often leverage the Common Vulnerability Scoring System (CVSS) to rate the significance of findings in vulnerability scans.

You might use it for: Identifying weaknesses in your cybersecurity stack, a good and valuable task in cyber risk management.

But the problem is: CVSS does not measure risk – it’s missing key factors for quantitative analysis such as frequency of attack or magnitude of impact.
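The gap can be sketched in Python by ranking the same findings two ways. The findings, frequencies and loss figures below are hypothetical, and `Finding` is an illustrative structure rather than the output format of any scanner.

```python
from dataclasses import dataclass

@dataclass
class Finding:
    name: str
    cvss: float             # CVSS base score on its 0.0 to 10.0 scale
    events_per_year: float  # hypothetical estimate of loss event frequency
    loss_per_event: float   # hypothetical estimate of loss magnitude, in dollars

    @property
    def annual_exposure(self) -> float:
        """Expected annual loss: frequency times magnitude."""
        return self.events_per_year * self.loss_per_event

# Two made-up findings: a severe flaw on a low-value asset, and a
# moderate flaw on a high-value, frequently attacked asset.
findings = [
    Finding("isolated test server", cvss=9.8, events_per_year=0.01, loss_per_event=50_000),
    Finding("customer billing portal", cvss=6.5, events_per_year=0.5, loss_per_event=4_000_000),
]

by_cvss = sorted(findings, key=lambda f: f.cvss, reverse=True)
by_exposure = sorted(findings, key=lambda f: f.annual_exposure, reverse=True)

print("Top priority by CVSS:    ", by_cvss[0].name)
print("Top priority by exposure:", by_exposure[0].name)
```

Under these assumptions the two rankings disagree, because the CVSS score carries no information about how often an attack is expected or how much a loss would cost.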

4. Credit-like scoring

Systems that collect data from scanning technologies and other sources to feed an algorithm that generates a score.

You might use it for: Benchmarking the third parties in your supply chain for security practices, or benchmarking your organization against peers.

But the problem is: They don’t measure how much risk exists. A score of 750 implies less risk than a score of 550, but the difference does not translate into quantifiable loss exposure. Also, these solutions can typically see only internet-facing assets (such as SSL certificates) and may miss significant parts of the organization’s risk landscape.

5. Threat analysis

Models such as DREAD and STRIDE formalize an organization’s ability to evaluate its threat landscape.

You might use it for: Identifying and mitigating vulnerable conditions in software, technology architecture, and processes. For CRQ analyses, it also can provide useful insights into the threat event frequency and threat capability variables (see this blog post: How to Use DREAD with FAIR).

But the problem is: In their focus on threats and vulnerabilities, they leave out other critical risk factors. They tend to focus on cyber attacks and not consider non-malicious events, such as human errors. And in the end, like other methods to watch out for, they rely on ordinal values, and the unreliable practice of using math on those values.

As Jack says in Understanding Cyber Risk Quantification: A Buyer’s Guide... For more advice and guidance from the creator of the international standard for cyber risk quantification, download the CRQ buyer’s guide now.