FAIR Cyber Risk Analysis for AI Part 2: Increased Sophistication of Phishing Attempts

In this blog post series, we’re examining how artificial intelligence (AI) is rapidly reshaping the threat landscape, and how to approach quantifying the resulting loss metrics using Factor Analysis of Information Risk (FAIR). Our last blog post explored Insider Threat associated with employee use of Large Language Models (LLMs) within organizations. This post covers the impact of generative AI on phishing.

Kevin Gust is a Professional Services Manager and Michael Smilanich a Risk Consultant for RiskLens.

An Example: Malicious External Actor Establishes Network Foothold via Phishing and Exfiltrates Customer PII

First, it is worth noting that this scenario is not a new one. According to the Cybersecurity and Infrastructure Security Agency (CISA), over 90% of successful cyber attacks begin with a phishing email. An External Actor using phishing to establish a network foothold is one of the most common cyber risk scenarios to consider. We at RiskLens have quantified the risk of this scenario for years and will continue to do so as long as bad actors have access to email.

Although this risk isn’t new, we should revisit our modeling approach to account for the rapid growth in the use of generative AI. One of the most productive uses of LLMs such as OpenAI's ChatGPT is generating text quickly, and in a professional setting that often means emails. Give the model a prompt like “I need help generating a courteous, professional, but not overly formal reply to an inquiry…” and it will produce a coherent response in seconds. The response may even be so well written that you could copy and paste it and send it to the intended recipient without editing.

This productivity hack can be beneficial for the average worker, but even more useful for a phishing scammer. According to the Wall Street Journal:

“There is little doubt that generative AI is a boon to phishing attackers, who can otherwise be tripped up by poorly worded scam emails. ChatGPT can write grammatically correct copy for them. Cybersecurity company Darktrace said in an April report that it had observed a 135% rise in spam emails to clients between January and February with markedly improved English-language grammar and syntax. The company suggested that this change was due to hackers using generative AI applications to craft their campaigns.”

Generative AI increases the sophistication of phishing attempts by making emails seem more realistic. It can create personalized messages that target specific individuals, and it lets attackers produce messages rapidly to speed up distribution. Attackers can also refine their messages based on which past campaigns succeeded or failed. These facts give us reason enough to take a fresh look at the underlying assumptions in our phishing scenarios.

Webinar

Attend a webinar: Quantifying AI Cyber Risk in Financial Terms, hosted by RiskLens, Tuesday, June 20, 2023 at 2 PM EDT.

Framing a Loss Event Scenario for FAIR Cyber Risk Analysis: Sophisticated Phishing with AI

To frame this type of incident in a way that allows us to quantify the potential loss exposure, we use FAIR scoping principles to identify a risk scenario:

- Threat: Malicious External Actor

- Effect: Confidentiality (i.e., data breach)

- Asset: “Crown Jewel” Database containing sensitive data (for example, customer PII)

- Method: Network Foothold (via Phishing)

To estimate Threat Event Frequency (TEF), we start with two key metrics:

- How many ‘true positive’ phishing emails landed in employee inboxes in the past year (i.e., emails that bypassed spam filters and other security tools)?

- What was your organization’s phishing click rate over the past year (e.g., based on actual clicks on malicious links or attachments that required remediation, or on the results of internal phishing awareness campaigns)?

For both metrics, it may be useful to track month-over-month changes in 2023 to determine whether the organization has actually seen an uptick in phishing emails and/or click rate. Given the trends described above, including the 135% rise in spam emails with improved grammar that Darktrace observed between January and February, we should expect a steady increase as the use of generative AI grows, and adjust our modeling accordingly.
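A figure like Darktrace's 135% is a relative change: a month with 235 phishing emails following a month with 100 is a 135% rise. The sketch below shows the month-over-month calculation, assuming you can export monthly counts of landed phishing emails (or clicks) from your own tooling. The function names and the counts are hypothetical placeholders, not real data.

```python
from __future__ import annotations


def percent_change(previous: float, current: float) -> float:
    """Relative change from `previous` to `current`, expressed in percent."""
    if previous <= 0:
        raise ValueError("previous count must be positive")
    return (current - previous) * 100 / previous


def month_over_month(monthly_counts: list[float]) -> list[float]:
    """Percent change between each pair of consecutive monthly counts."""
    return [
        percent_change(prev, curr)
        for prev, curr in zip(monthly_counts, monthly_counts[1:])
    ]


# Hypothetical counts of phishing emails that reached inboxes, January to April.
landed_per_month = [100, 235, 250, 280]
for month, change in zip(["Feb", "Mar", "Apr"], month_over_month(landed_per_month)):
    print(f"{month}: {change:+.1f}%")
```

A sustained run of positive values, rather than a single spike, is the kind of signal that would justify raising TEF estimates.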

Traditional security awareness training is another consideration that will influence the key drivers of Threat Event Frequency. A typical security awareness campaign encourages employees to look out for bad grammar, errors, typos, and odd formatting in potential phishing emails. These cues may no longer be reliable, so corporate security awareness programs should evolve with the emerging threat landscape (and potentially give employees tougher tests) to evaluate organizational resilience to phishing attempts more accurately.
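One rough way to turn the two TEF metrics into a range, assuming each click on a landed phishing email counts as one threat event against the network, is to multiply emails landed by click rate. This is an illustrative sketch, not the RiskLens method: `Range`, `threat_event_frequency`, and every input value are hypothetical, and multiplying like-for-like bounds is a simplification. It shows how a click rate that rises because employees can no longer rely on spotting bad grammar pushes TEF up even when email volume stays flat.

```python
from __future__ import annotations

from typing import NamedTuple


class Range(NamedTuple):
    """Hypothetical helper: a calibrated estimate (minimum, most likely, maximum)."""

    low: float
    most_likely: float
    high: float


def threat_event_frequency(emails_landed: Range, click_rate: Range) -> Range:
    """Rough annual TEF range: phishing emails landed multiplied by click rate.

    Multiplying low by low and high by high assumes the two inputs move
    together; a Monte Carlo simulation would sample them independently.
    """
    for rate in click_rate:
        if not 0.0 <= rate <= 1.0:
            raise ValueError("click rate must be a fraction between 0 and 1")
    return Range(*(emails * rate for emails, rate in zip(emails_landed, click_rate)))


# Hypothetical inputs: the same volume of landed phishing emails, with click
# rates before and after attackers adopt AI-polished messages.
landed = Range(low=800, most_likely=1_200, high=2_000)
baseline_clicks = Range(0.01, 0.03, 0.06)
ai_polished_clicks = Range(0.02, 0.05, 0.10)

print("Baseline TEF:", threat_event_frequency(landed, baseline_clicks))
print("AI-polished TEF:", threat_event_frequency(landed, ai_polished_clicks))
```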

Next, we estimate Vulnerability (or Susceptibility; see the FAIR definitions) by evaluating the presence and efficacy of key controls:

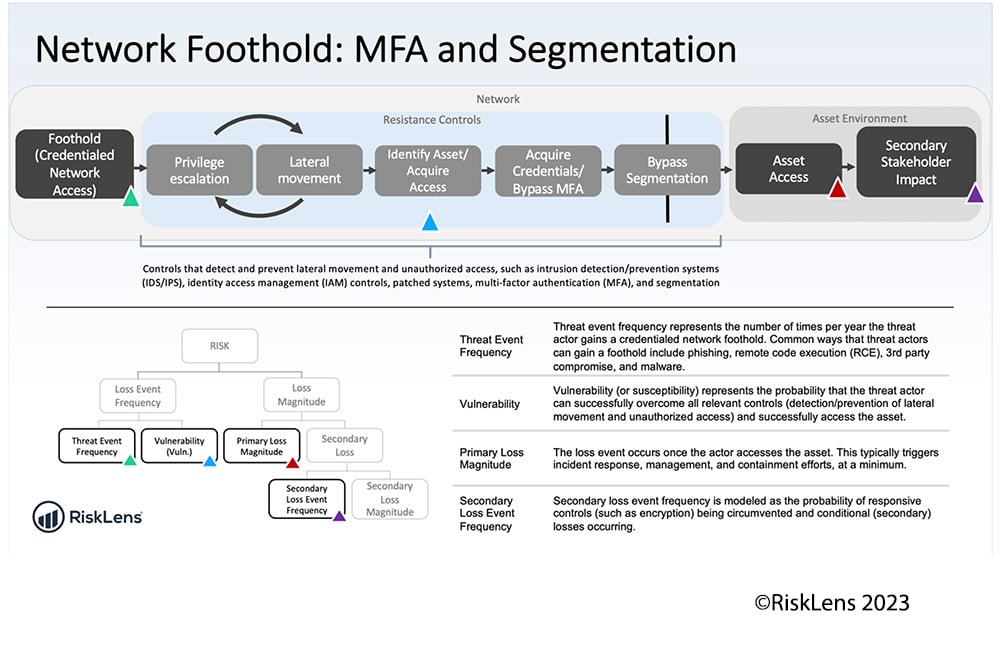

- Are controls that detect and prevent lateral movement and unauthorized access in place? Such as:

o Intrusion detection/prevention systems (IDS/IPS)

o Identity access management (IAM) controls

o Patched systems

o Multi-factor authentication (MFA)

o Segmentation

RiskLens in-platform scenario guidance, showing related FAIR factors (click for larger image):
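In the FAIR ontology, Vulnerability can be derived by comparing Threat Capability (TCap) with Resistance Strength (RS), where RS reflects controls like those listed above; Vulnerability is then the probability that TCap exceeds RS. The sketch below estimates that probability by Monte Carlo simulation, assuming both are estimated as PERT ranges (minimum, most likely, maximum) on a 0 to 100 percentile scale against the threat community. The function names and all ranges are hypothetical.

```python
from __future__ import annotations

import random


def sample_pert(rng: random.Random, low: float, mode: float, high: float) -> float:
    """Draw one value from a PERT distribution (a rescaled beta with lambda = 4)."""
    if not low <= mode <= high or low == high:
        raise ValueError("need low <= mode <= high and low < high")
    span = high - low
    alpha = 1 + 4 * (mode - low) / span
    beta = 1 + 4 * (high - mode) / span
    return low + span * rng.betavariate(alpha, beta)


def estimate_vulnerability(
    threat_capability: tuple[float, float, float],
    resistance_strength: tuple[float, float, float],
    trials: int = 50_000,
    seed: int = 1,
) -> float:
    """Estimate P(TCap > RS) as the share of trials where sampled TCap wins."""
    rng = random.Random(seed)  # seeded so results are reproducible
    successes = sum(
        sample_pert(rng, *threat_capability) > sample_pert(rng, *resistance_strength)
        for _ in range(trials)
    )
    return successes / trials


# Hypothetical ranges on a 0-100 percentile scale against the threat community.
threat_actor = (45, 65, 90)        # TCap of the malicious external actor
current_controls = (40, 60, 80)    # RS with today's IDS/IPS, IAM, patching, MFA
improved_controls = (60, 75, 90)   # RS after, say, adding network segmentation

print(f"Current controls:  {estimate_vulnerability(threat_actor, current_controls):.0%}")
print(f"Improved controls: {estimate_vulnerability(threat_actor, improved_controls):.0%}")
```

Comparing the two runs is one way to express the value of a control improvement in the same probability terms FAIR uses downstream.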

Loss Magnitude for sensitive data such as PII, PHI, and PCI data is relatively straightforward to estimate from the data type and number of records, using firmographic industry data (region, revenue, and industry) provided by RiskLens Data Science. Typical losses we would expect to materialize in these scenarios include:

- Primary response costs are those associated with assembling incident response teams, including the computer security incident response team (CSIRT), along with staff from various departments, to manage an incident.

- Secondary response costs are the activities and expenses incurred in dealing with secondary stakeholders. For a data breach, these include customer notifications, credit monitoring, and the cost of defending against class-action lawsuits brought by affected customers.

- Fines and judgments are losses such as a regulatory fine, a civil judgment, or a fee imposed under contractual terms.
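Once Loss Event Frequency (LEF, which FAIR derives from TEF and Vulnerability) and ranges for each form of loss have been estimated, annualized loss exposure is commonly simulated by multiplying a sampled LEF by a sampled per-event loss over many trials. The sketch below assumes every loss event incurs all three forms of loss listed above (a simplification that skips FAIR's separate secondary loss event frequency), samples each from a PERT distribution, and reports the mean and 90th percentile. All function names and ranges are hypothetical placeholders, not RiskLens data.

```python
from __future__ import annotations

import random
import statistics


def sample_pert(rng: random.Random, low: float, mode: float, high: float) -> float:
    """Draw one value from a PERT distribution (a rescaled beta with lambda = 4)."""
    if not low <= mode <= high or low == high:
        raise ValueError("need low <= mode <= high and low < high")
    span = high - low
    alpha = 1 + 4 * (mode - low) / span
    beta = 1 + 4 * (high - mode) / span
    return low + span * rng.betavariate(alpha, beta)


def simulate_annual_loss(
    lef: tuple[float, float, float],
    primary_response: tuple[float, float, float],
    secondary_response: tuple[float, float, float],
    fines_and_judgments: tuple[float, float, float],
    trials: int = 20_000,
    seed: int = 7,
) -> list[float]:
    """Return one simulated annualized loss per trial: LEF x per-event loss."""
    rng = random.Random(seed)  # seeded so results are reproducible
    losses = []
    for _ in range(trials):
        events_per_year = sample_pert(rng, *lef)
        per_event_loss = (
            sample_pert(rng, *primary_response)
            + sample_pert(rng, *secondary_response)
            + sample_pert(rng, *fines_and_judgments)
        )
        losses.append(events_per_year * per_event_loss)
    return losses


# Hypothetical ranges: loss events per year, then US dollars per event.
annual_losses = simulate_annual_loss(
    lef=(0.05, 0.2, 0.6),
    primary_response=(50_000, 150_000, 400_000),
    secondary_response=(200_000, 1_000_000, 5_000_000),
    fines_and_judgments=(0, 250_000, 2_000_000),
)
print(f"Mean annualized loss: ${statistics.mean(annual_losses):,.0f}")
print(f"90th percentile:      ${statistics.quantiles(annual_losses, n=10)[-1]:,.0f}")
```

Reporting a high percentile alongside the mean reflects the long right tail typical of breach losses, where lawsuits and fines can dominate.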

Conclusion: Emerging Cyber Risks in the AI-powered Future

Increased sophistication of phishing attempts is one example of how an AI-powered future can add complexity to existing cyber risk scenarios. These risks call for a comprehensive, risk-informed approach to AI governance and for robust risk quantification models like FAIR, powered by a tool like RiskLens, to guide us through this rapidly changing threat landscape. Stay tuned for more posts like this as we keep track of emerging AI-related cyber risks and discuss approaches to quantifying their impact.
