FAIR Cyber Risk Analysis for AI Part 4: Audio Deepfakes in Social Engineering

Over the past few months, Generative Artificial Intelligence (AI) has demonstrated some impressive capabilities. One of those is its ability to mimic human voices.

In April 2023, a song featuring Drake and The Weeknd went viral. Within three days, it racked up over 10 million views on TikTok and 250,000 streams on Spotify. For artists of their stature, a viral hit is nothing out of the ordinary. The difference this time is that the hit was generated by a little-known individual called Ghostwriter using AI, without the stars’ knowledge.

The blend of pop culture, music, and media exposure in this incident showcases the latest capabilities of AI to a wide audience. Aside from possible legal and ethical dilemmas, this example also raises questions of how audio deepfakes may be used for other, more nefarious, purposes.

Kevin Gust is a Professional Services Manager and Michael Smilanich a Risk Consultant for RiskLens.

Up to this point in our AI blog post series we have analyzed the effect of AI tools, specifically Large Language Models (LLMs), on insider threats, increased sophistication of phishing attempts, and lowering barriers to entry for hackers. This post will examine how deepfake audio can be (and already has been) used to convince victims to part with large sums of money in business email compromise (BEC) scams. We’ll also give an example of how to approach quantifying potential resulting loss metrics using Factor Analysis of Information Risk (FAIR™).

AI Deepfake Risk Scenario

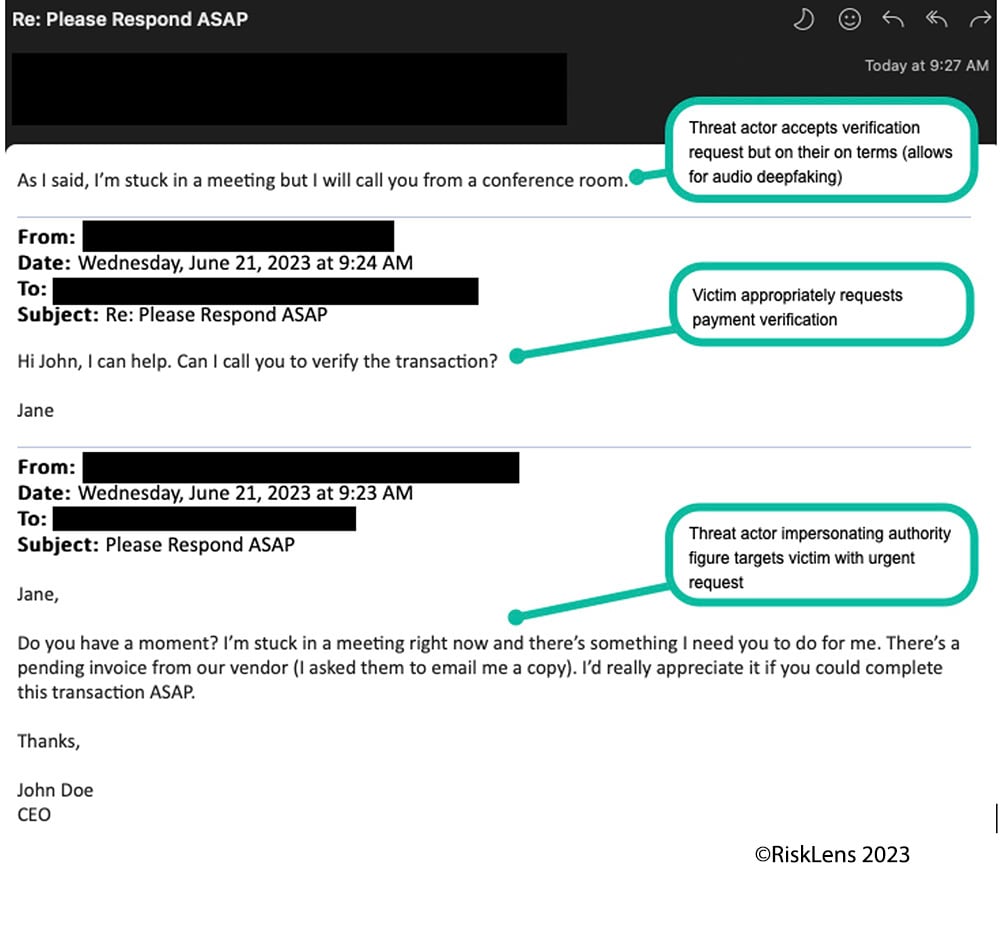

Malicious External Actor uses spear phishing to compromise an executive's email account credentials, then uses the compromised account in combination with deepfake audio to impersonate company leadership and manipulate an employee to wire funds to a fraudulent account.

Business email compromise (BEC) and vendor email compromise (VEC) are common forms of social engineering in which threat actors attempt to financially exploit businesses online. According to the FBI, “criminals send an email message that appears to come from a known source making a legitimate request, like in these examples:

- A vendor your company regularly deals with sends an invoice with an updated mailing address.

- A company CEO asks her assistant to purchase dozens of gift cards to send out as employee rewards. She asks for the serial numbers so she can email them out right away.”

Example of CEO impersonation via email account compromise followed by audio deepfake

According to the 2023 Verizon DBIR, phishing is the most common method in confirmed social engineering breaches. Once a threat actor has compromised an account via phishing, they leverage a (perceived) trusting relationship to influence a target to act.

“These attackers use a combination of strategies to accomplish this: by creating a false sense of urgency for us to provide a reply or to perform an action, a fake petition from authority, or even hijacking existing communication threads to convince us to disclose sensitive data or take some other action on their behalf.” (2023 Verizon DBIR)

Given the rapid increase in the use and quality of deepfakes generated by AI tools, these become a new weapon in the threat actor’s arsenal.

A well-trained employee can reasonably be expected to identify a suspicious request coming from a company executive or vendor. The FBI recommends the following to protect yourself from this type of attack: “Verify payment and purchase requests in person if possible or by calling the person to make sure it is legitimate. You should verify any change in account number or payment procedures with the person making the request.”

In the age of remote work, in-person verification is not always possible. If we assume that an employee takes the appropriate step to ask the supposed executive to confirm the request via phone, then an audio deepfake could be the final step in securing trust and convincing a target to act.

If you sent your CEO an email asking to verify a request via phone and received a call shortly after from a voice that sounded exactly like that individual, wouldn’t you be compelled to act?

Voice impersonation isn’t a new idea. The Verge published an article in 2021 titled “Everyone will be able to clone their voice in the future.” Recent developments in technology have made voice clones easier to produce and more believable. According to PC Mag, “Microsoft’s AI Program Can Clone Your Voice From a 3-Second Audio Clip.” Under the assumption that audio deepfakes become more accurate with more training material, company executives are especially susceptible to imitation. Most public company CEOs have hours of recorded dialogue available on the internet between earnings calls, speaking engagements, and other public-facing events.

Up to this point, this may seem like an alarmist view, but there is some good news. While deepfakes are disturbing and difficult to identify, a lot of things must go right for the threat actor to achieve their goal. In the scenario above, a threat actor would need to compromise a specific executive’s email account credentials via spear phishing, build a trusted relationship with a victim, and convince that victim to wire money in what they perceive to be a legitimate business transaction. There are a lot of hoops to jump through for a loss event to occur.

From a loss magnitude perspective, the median amount of money stolen in these attacks is around $50,000 and “law enforcement has developed a process by which they collaborate with banks to help recover money stolen from attacks such as BEC. More than 50% of victims were able to recover at least 82% of their stolen money.” (2023 Verizon DBIR)

While there have been examples of larger frauds, like this instance of a company in the United Arab Emirates losing $35 million due to a combination of forged emails and deepfake audio, this appears to be the exception, rather than the norm.

Another reason for optimism is that some voice AI companies, such as ElevenLabs, are starting to offer free tools to help identify whether audio clips were created using AI. The ElevenLabs speech identifier is tailored to recognize audio generated by its own voice AI and does not promise to identify audio generated by other AI products. In addition, a victim may not have the wherewithal to use such a tool in the middle of a scam. Nevertheless, the availability of a tool like this is a step in the right direction.

Framing a Loss Event for FAIR Cyber Risk Analysis - Audio Deepfakes in Social Engineering

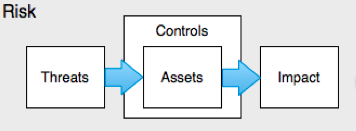

To frame this type of incident in a way that allows us to quantify the potential loss exposure, we use FAIR scoping principles to identify the following:

- Threat: Malicious External Actor

- Effect: Direct Financial Loss

- Asset: Corporate Funds

- Method: Social Engineering (Phishing > BEC > Deepfake Audio Impersonation)

Estimating Threat Event Frequency (TEF) is a challenge in a multi-step attack chain. As a starting point, we would consider the “network foothold via phishing” scoping guidance provided in this blog post.

Now that you have a starting point, here are some things you might consider in your estimates for this scenario:

- An attacker would have to compromise a specific executive’s email account credentials (rather than any employee account).

- If available, focus on data points related to executives such as the number of ‘true positive’ phishing emails received by executives and phishing click rate for executives over the past year.

- Our assumption is that TEF for this specific scenario would be lower than a general phishing scenario due to the factors above.
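
One way to turn these data points into a TEF range is a small simulation. The sketch below is illustrative only and is not the authors' method: it assumes TEF can be approximated as the number of true-positive phishing emails reaching executives per year, multiplied by the executive click rate, multiplied by the share of clicks that end in compromised credentials. That third factor, the function name `simulate_tef`, the choice of triangular distributions, and every input value are assumptions made for the example.

```python
import random
import statistics


def simulate_tef(emails_per_year, click_rate, compromise_given_click,
                 trials=10_000, seed=1):
    """Sample executive-account compromises per year (a TEF estimate).

    Each input is a (min, most_likely, max) tuple, in the spirit of a
    calibrated range estimate. All three factors are sampled independently,
    which is a simplifying assumption.
    """
    rng = random.Random(seed)

    def draw(estimate):
        low, mode, high = estimate
        # random.triangular takes (low, high, mode) in that order
        return rng.triangular(low, high, mode)

    return [
        draw(emails_per_year) * draw(click_rate) * draw(compromise_given_click)
        for _ in range(trials)
    ]


# Hypothetical placeholder inputs; replace with your organization's data.
tef = simulate_tef(
    emails_per_year=(20, 60, 150),             # true-positive phish sent to executives
    click_rate=(0.01, 0.03, 0.08),             # executive phishing click rate
    compromise_given_click=(0.05, 0.15, 0.30)  # assumed share of clicks yielding credentials
)
print(f"Median TEF: {statistics.median(tef):.2f} events per year")
```

Restricting the inputs to executive-only data is what pushes this estimate below a general phishing TEF, in line with the assumption stated above.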

Estimating Vulnerability also requires a change in assumptions. For a general phishing scenario, we recommend reviewing the presence and efficacy of controls in place to detect and prevent lateral movement and unauthorized access.

For this scenario, we assume that TEF represents the frequency with which a threat actor will compromise an executive’s email account credentials. Therefore, we should evaluate our susceptibility to falling victim to fraud once that account is compromised. Some things to consider include:

- Which individuals or groups within the organization are likely to be targeted based on job responsibilities and access to financial accounts?

  - Are these people trained to recognize suspicious communication and requests?

- Are there standard verification processes in place that are likely to prevent this type of attack?

- Is more than one person involved in authorizing a request to wire money?

  - Are there multiple levels of approval, separation of duties, or other controls?
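
The questions above can be read as layers of control that the fraud has to pass. As a rough sketch, assuming the layers fail independently (a simplification that real controls may not satisfy), Vulnerability is the product of the per-layer failure probabilities, and Loss Event Frequency is TEF multiplied by Vulnerability. The function names, the three layers chosen, and the numbers are hypothetical and serve only to illustrate the arithmetic.

```python
def vulnerability(p_request_not_flagged, p_verification_fooled,
                  p_approval_passes):
    """Probability the fraud succeeds once the executive account is compromised.

    Assumes three independent control layers (an assumption for this sketch):
      - the targeted employee does not flag the request as suspicious
      - the verification step (e.g. a callback) is fooled by deepfake audio
      - any additional approver lets the wire transfer through
    """
    probabilities = (p_request_not_flagged, p_verification_fooled,
                     p_approval_passes)
    for p in probabilities:
        if not 0.0 <= p <= 1.0:
            raise ValueError("each probability must be between 0 and 1")
    result = 1.0
    for p in probabilities:
        result *= p
    return result


def loss_event_frequency(tef, vuln):
    """FAIR: Loss Event Frequency = Threat Event Frequency x Vulnerability."""
    return tef * vuln


# Hypothetical placeholder values.
vuln = vulnerability(p_request_not_flagged=0.5,
                     p_verification_fooled=0.4,
                     p_approval_passes=0.25)
lef = loss_event_frequency(tef=0.2, vuln=vuln)
print(f"Vulnerability: {vuln:.3f}, LEF: {lef:.3f} loss events per year")
```

Each added layer, such as a second approver, multiplies Vulnerability by a number below one, which is why separation of duties matters even when a deepfake defeats the phone callback.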

Typical losses we would expect to materialize in this scenario include:

- Primary response costs, such as investigation and forensics, plus the time the incident response and computer security incident response teams and other departments spend managing the incident.

- Primary replacement costs represent the amount of funds lost in the case of fraud. Given the examples listed earlier in this post, from a median of around $50,000 to an outlier of $35 million, this could be a wide range depending on your organization.

- We would not anticipate secondary losses in this scenario.
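
To show how these loss forms combine into an annual figure, here is a minimal Monte Carlo sketch. It makes several assumptions that are not from the analysis above: loss events per year follow a Poisson distribution with mean equal to the Loss Event Frequency, each cost is drawn from a triangular (min, most likely, max) range, and part of the stolen funds is recovered. The function names and all input values are hypothetical; only the $50,000 most-likely figure echoes the median cited earlier.

```python
import math
import random
import statistics


def poisson(rng, lam):
    """Draw an event count with mean lam (Knuth's method, suited to small lam)."""
    threshold = math.exp(-lam)
    count, product = 0, rng.random()
    while product > threshold:
        count += 1
        product *= rng.random()
    return count


def simulate_annual_loss(lef, response_cost, funds_lost, recovery_fraction,
                         trials=10_000, seed=1):
    """Return one simulated total primary loss per trial year.

    lef is loss events per year; the other inputs are
    (min, most_likely, max) tuples. No secondary loss is modeled.
    """
    rng = random.Random(seed)

    def draw(estimate):
        low, mode, high = estimate
        return rng.triangular(low, high, mode)  # argument order: low, high, mode

    annual = []
    for _year in range(trials):
        total = 0.0
        for _event in range(poisson(rng, lef)):
            # Replacement cost is the stolen amount net of any recovery.
            net_funds = draw(funds_lost) * (1.0 - draw(recovery_fraction))
            total += draw(response_cost) + net_funds
        annual.append(total)
    return annual


# Hypothetical placeholder inputs.
annual = simulate_annual_loss(
    lef=0.05,
    response_cost=(5_000, 20_000, 75_000),
    funds_lost=(10_000, 50_000, 500_000),
    recovery_fraction=(0.0, 0.5, 0.9),
)
p99 = sorted(annual)[int(0.99 * len(annual))]
print(f"Mean annual loss: ${statistics.mean(annual):,.0f}")
print(f"99th percentile annual loss: ${p99:,.0f}")
```

With a low Loss Event Frequency, most simulated years show no loss at all, so the tail percentiles say more about exposure than the average does.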

Conclusion: Quantifying AI Risk Drives Better Business Decisions

As the use of AI becomes increasingly ubiquitous, it is important to consider the potential risks that accompany this societal shift. This post provides one example of how threat actors can use audio deepfakes to defraud companies. There are other potential loss events involving audio and other kinds of deepfakes which could be analyzed, not to mention macro-level concerns which could have a broader impact on society. Our objective with this series about AI risk is to move away from fear, uncertainty, and doubt to tangibly demonstrate how AI impacts cyber (business) risk and how we can quantify that risk to help drive better decision making.

Read our blog post series on FAIR risk analysis for AI and the new threat landscape